How to secure meetings with funders on LinkedIn

Michelle Benson, Founder of Culture of Philanthropy, shares her four-step strategy for how to get the best out of LinkedIn.

Is your charity using LinkedIn to generate new business leads and steward/retain your existing donors?

Or are you just using it to look up cold prospects so you can send them cold messages or connection requests, while your existing donors get the odd thank-you post for attending events or making a donation?

Most charities do NOT have an intentional strategy on how to get the best out of LinkedIn.

I’m Michelle Benson. I teach fundraisers, CEOs, Comms Teams and Charity Consultants how to use LinkedIn strategically, and I’ll be opening this year’s Fundraising Now Conference on Thursday 6 February as the keynote speaker.

I’ll be walking you through why LinkedIn offers charities (of any size) a unique opportunity to warm up a large funding audience. I’ll also be going through my four-step process for using LinkedIn to secure meetings with funders you would struggle to engage offline.

Why LinkedIn?

The big difference between getting meetings with funders offline versus online is:

- Offline, you have to approach funders either cold or via someone else (an introduction from a Trustee, for example). There isn’t a chance (that you control) to get to know them before you approach them directly.

- Online, you can get to know people in the news feed before you approach them or they approach you – so when you do send that message or they come to you, you are no longer a cold caller.

LinkedIn gives you an opportunity to warm up a very large group of funders, so you have plenty of people to approach, or to have come to you. It does this in a simple and straightforward manner, and for free (if you know what you are doing).

It also offers a number of unique stewardship opportunities.

When you are on LinkedIn, is your news feed full of posts from other charities and fundraisers? If so, what makes you think your own posts will be seen by funders? If you’re on the platform and you’re not seeing funders’ posts, they are not seeing anything from you either.

I teach fundraisers how to change their news feed so that when they go on LinkedIn it is full of funders, and they can like, comment and get to know people. That is how people start to come to you, and how you get known among hundreds of funders.

Most people have a two-step process for LinkedIn: find funders, then message them. Then they wonder why this strategy doesn’t work, or consider 2 responses out of 100 a success.

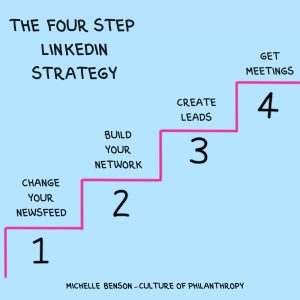

My four-step LinkedIn strategy

- Change your news feed so you see funders and they see you

- Build up (and warm up) your network with or without posting content

- Create leads as a warm caller, never a cold one – which means you are always a welcome guest and not an unwanted pest

- Book meetings

I’ll be walking through the opportunities LinkedIn offers compared to offline fundraising, and how to avoid the common pitfalls that land most fundraisers and CEOs in an echo chamber with lots of other charities – when they could instead be getting to know all the funders they cannot get in front of offline.

If you would like to learn more tips and tricks on how to get the best out of LinkedIn, you can follow me on LinkedIn here.

Innovation, Impact and Inspiration – DSC’s annual Fundraising Now conference returns for 2025!

Fundraising Now is your one-stop conference for fundraising innovation, impact and inspiration. By strategically embracing innovation, you can ensure your charity’s mission resonates deeply, securing the support you need in a rapidly evolving world.

Join us for a day of expert-led sessions, practical workshops, and networking opportunities that will inspire and empower you to achieve the impact your charity needs in 2025 and beyond.